⛽: Equal contribution

Baohao Liao, Shahram Khadivi, Sanjika Hewavitharana

[Under review at WMT21]

[Paper]

Yingbo Gao⛽, Baohao Liao⛽, Hermann Ney

[COLING (2020)] [BibTeX] [Code]

Baohao Liao, Yingbo Gao, Hermann Ney

[Findings of EMNLP (2020)] [BibTeX]

Mathis Bode, Michael Gauding, Jens Henrik Göbbert, Baohao Liao, Jenia Jitsev, Heinz Pitsch

[International Conference on High Performance Computing (2018)] [BibTeX]

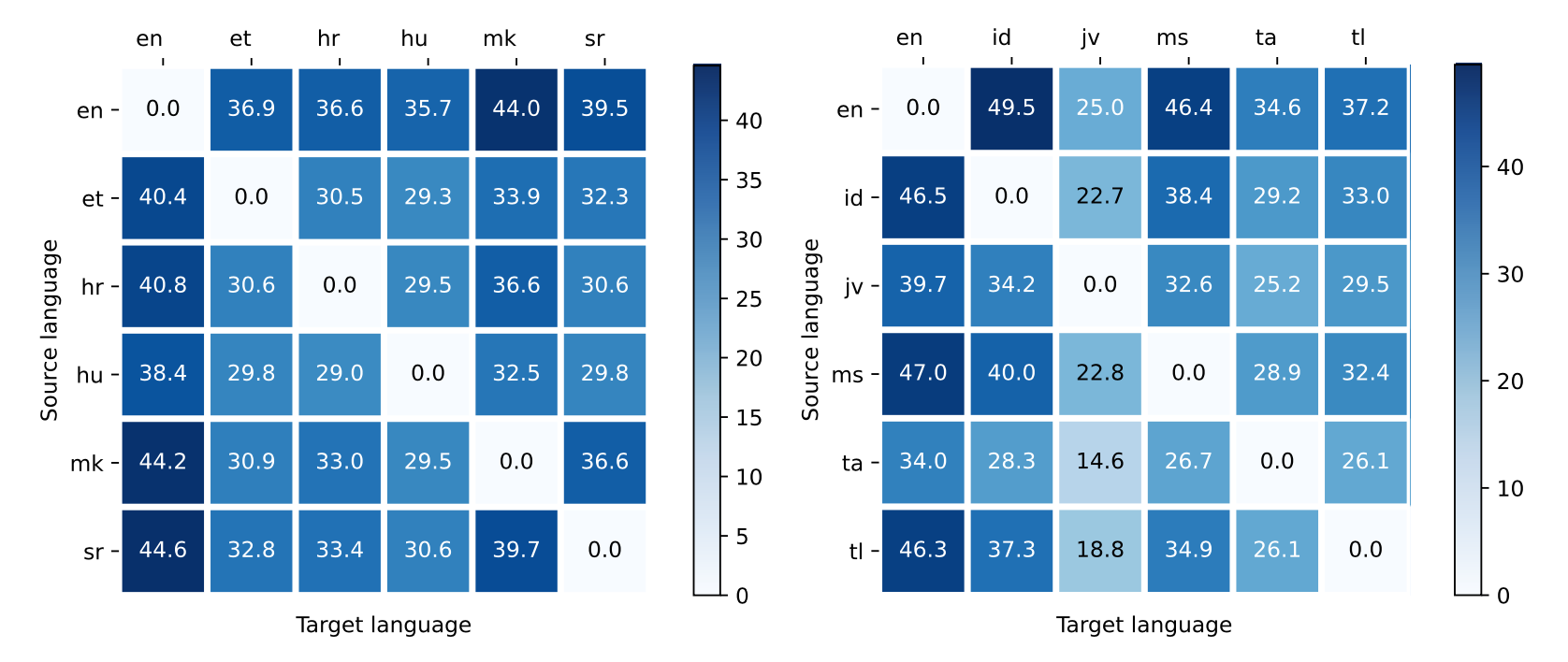

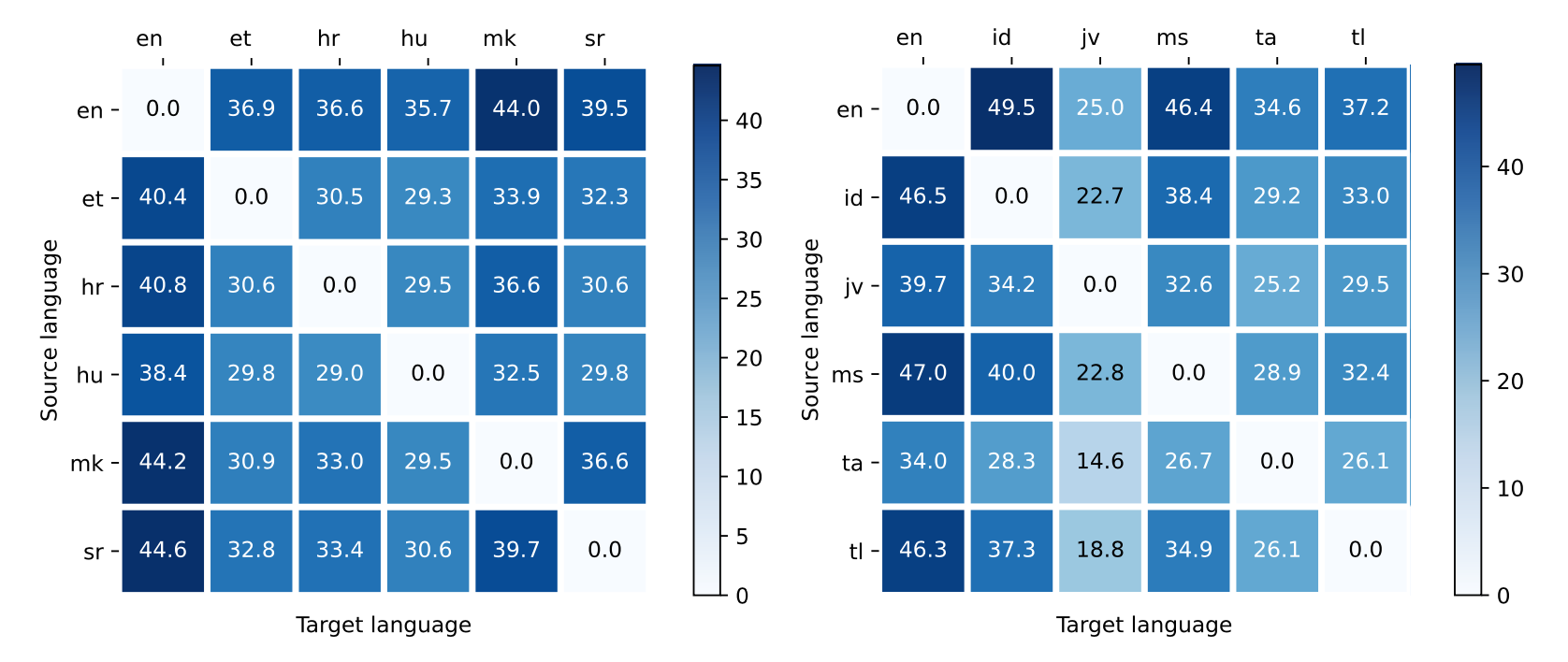

Back-translation for Large-Scale Multilingual Machine Translation

We extend the study of back-translation from bilingual to multilingual machine translation and report findings that differ from those in the bilingual setting.

[Under review at WMT21]

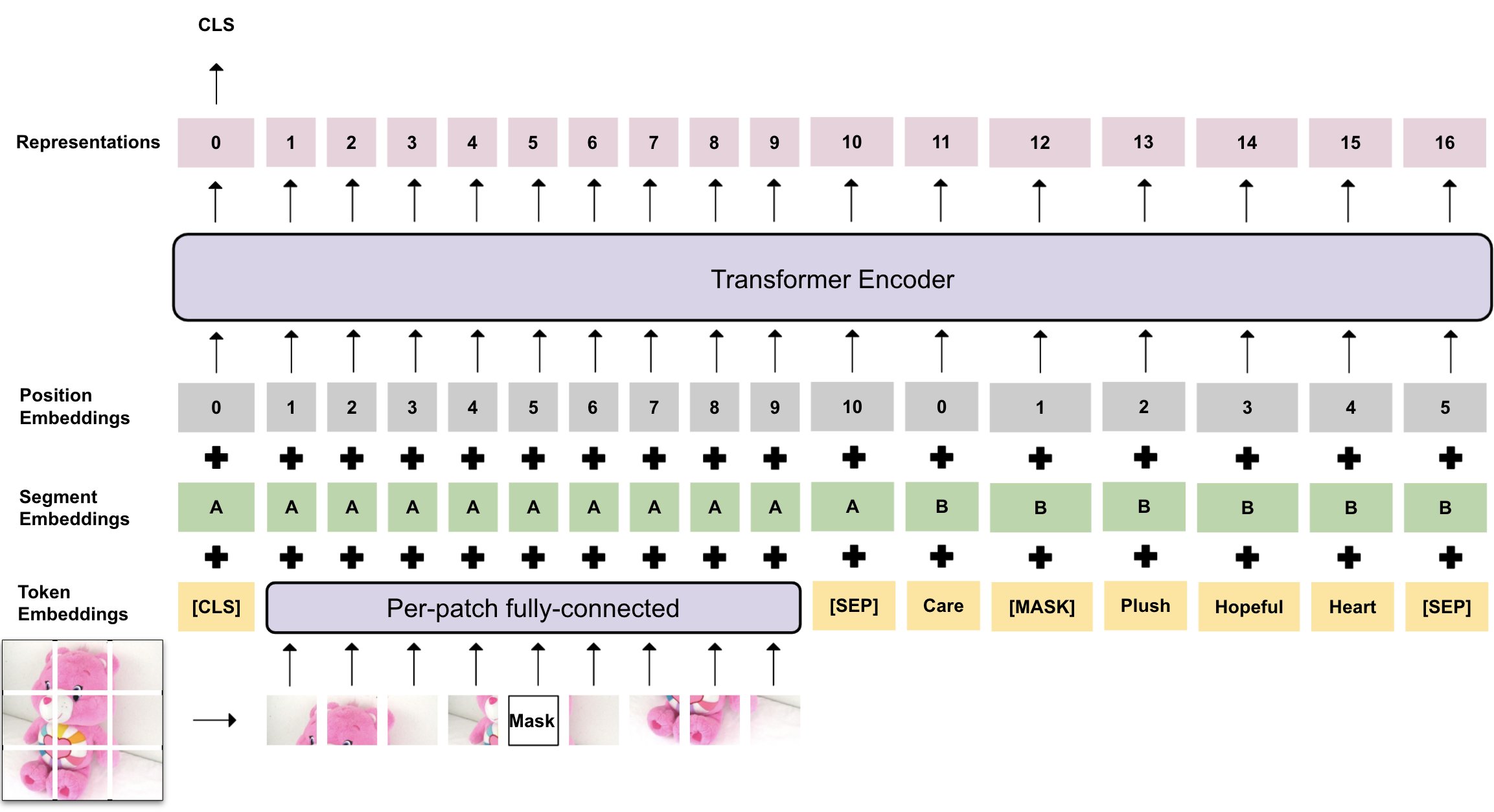

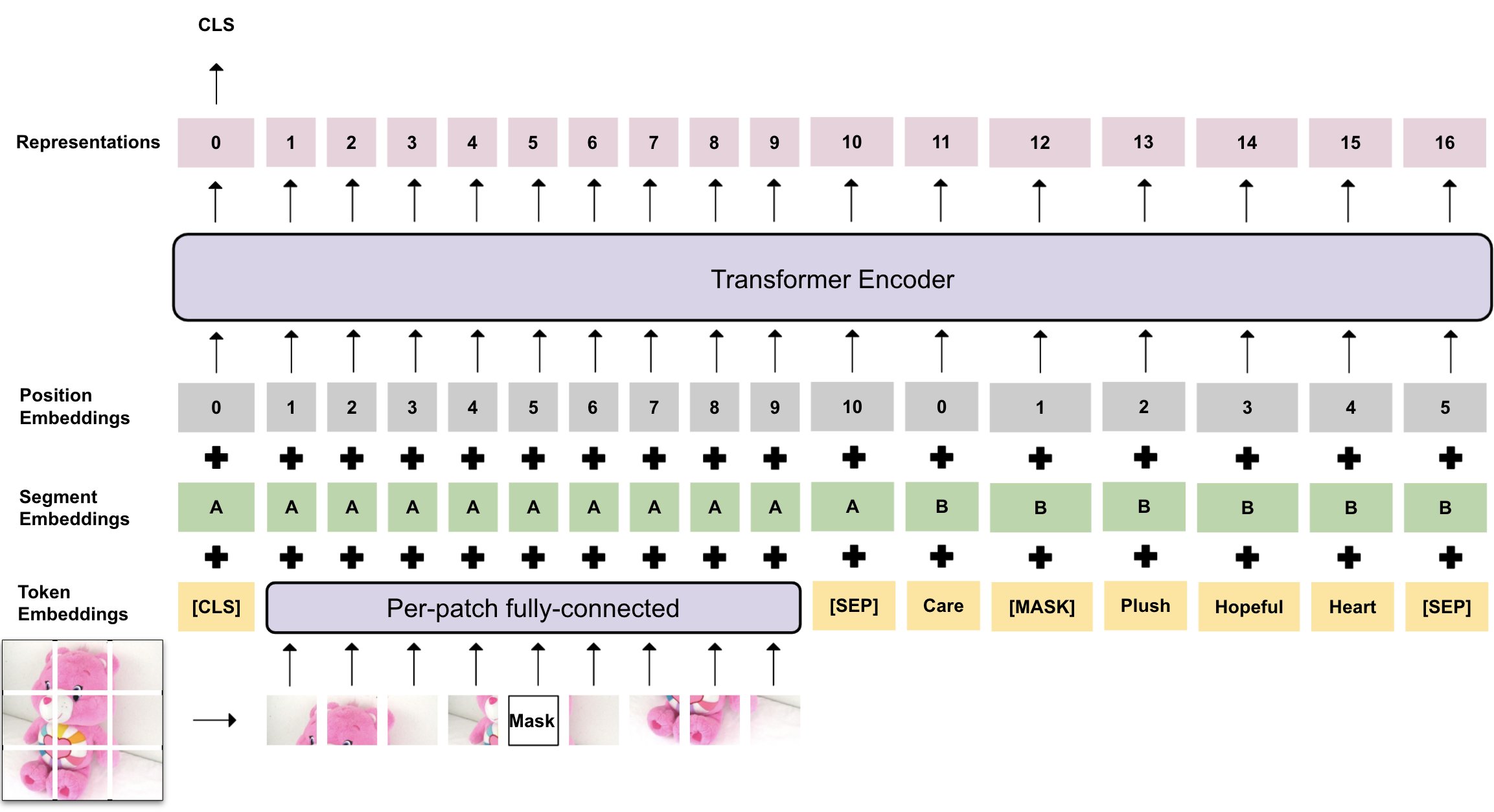

How to Attend to Different Modalities Equally: Self-supervised Learning for Multimodal Product Embedding

We introduce a BERT-variant model and new training objectives to learn multimodal representations for eProduct.

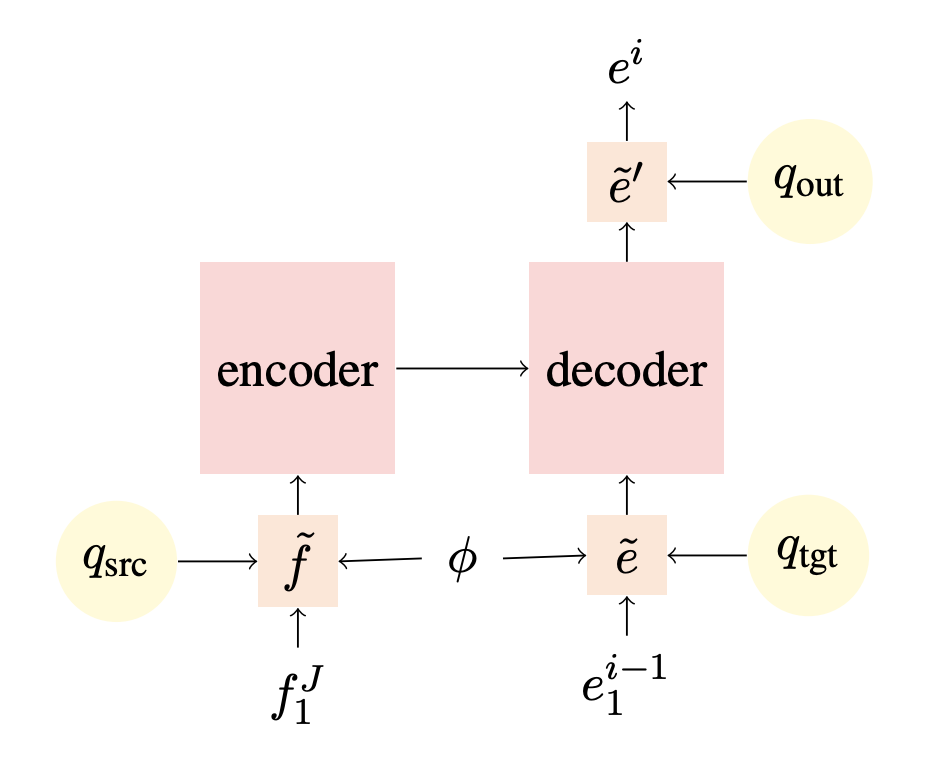

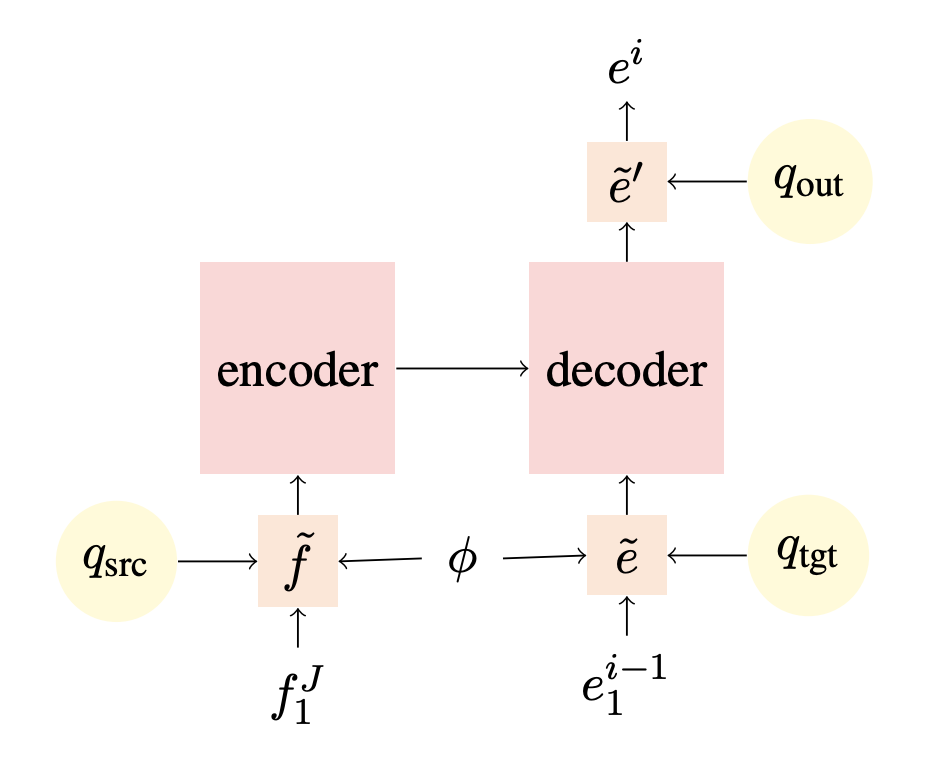

Unifying Input and Output Smoothing in Neural Machine Translation

We study how best to combine label smoothing and soft contextualized data augmentation so that their improvements stack in neural machine translation.

[COLING (2020)] [BibTeX] [Code]

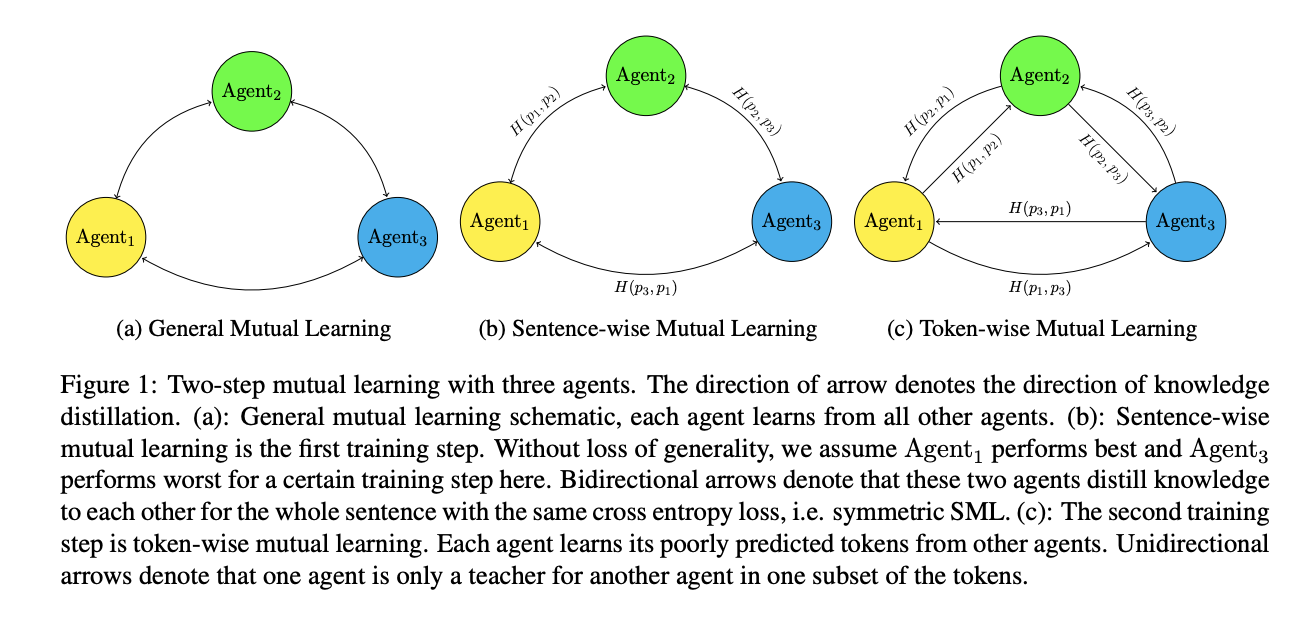

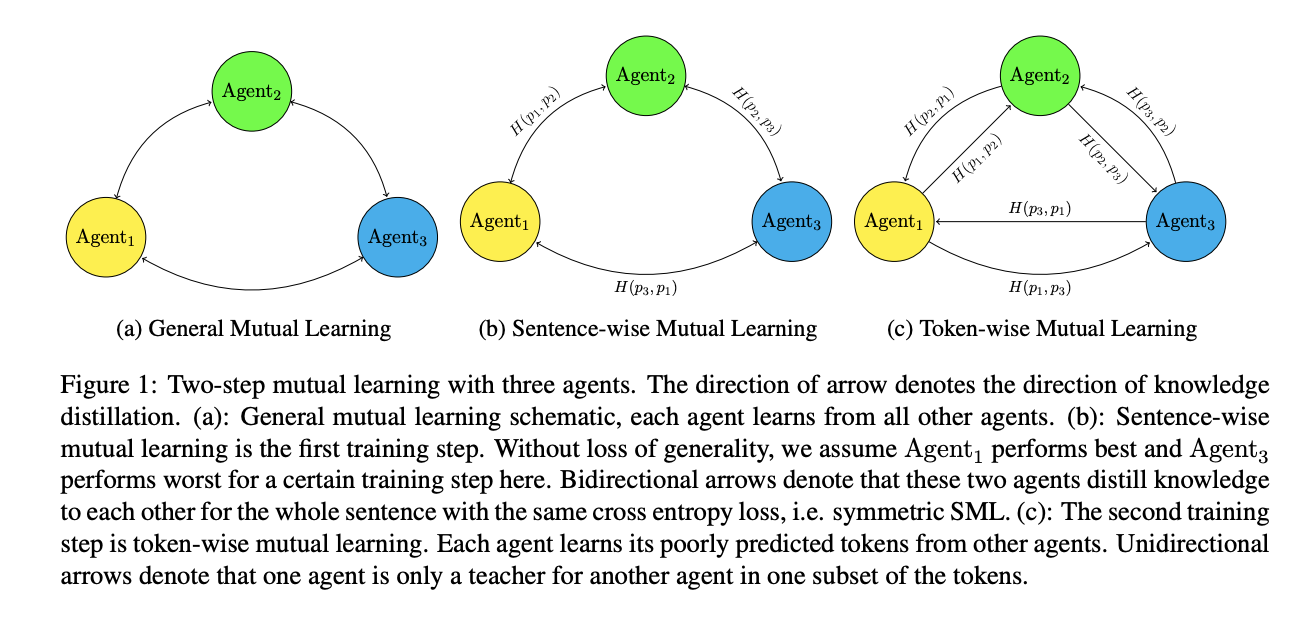

Multi-Agent Mutual Learning at Sentence-Level and Token-Level for Neural Machine Translation

As an alternative to teacher-student learning, our models distill knowledge from each other on an equal footing, at both the sentence level and the token level, for neural machine translation.

[Findings of EMNLP (2020)] [BibTeX]

Towards Prediction of Turbulent Flows at High Reynolds Numbers Using High Performance Computing Data and Deep Learning

We apply several GAN techniques to generate 3D turbulent flows and analyze the physical properties of the generated flows.

Mathis Bode, Michael Gauding, Jens Henrik Göbbert, Baohao Liao, Jenia Jitsev, Heinz Pitsch

[International Conference on High Performance Computing (2018)] [BibTeX]